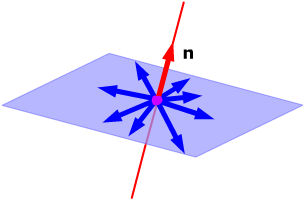

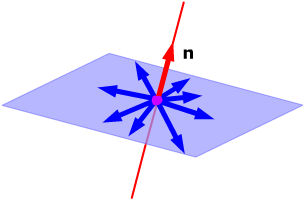

Suppose you have a 2-dimensional subspace of Euclidean 3-space, i.e. a plane through the origin with some normal vector n. All the vectors in 3-space orthogonal to this plane must then be parallel to n, so they form a 1-dimensional subspace of 3-space.
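To make the picture concrete, here is a small Python sketch. The vectors `a` and `b` are a made-up example, and the dot product is assumed as the inner product. It builds a normal vector n to the plane spanned by `a` and `b` with the cross product, then checks that n is orthogonal to vectors in that plane.

```python
def cross(a, b):
    """Cross product of two vectors in R^3; the result is orthogonal to both."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(u, v):
    """Euclidean inner product (dot product)."""
    return sum(x * y for x, y in zip(u, v))

# Hypothetical plane through the origin, spanned by a and b.
a = (1, 0, 2)
b = (0, 1, -1)
n = cross(a, b)          # a normal vector to the plane
print(n)                 # (-2, 1, 1)

# A vector in the plane is a combination s*a + t*b; here s = 3, t = -2.
w = tuple(3 * ai - 2 * bi for ai, bi in zip(a, b))
print(dot(n, a), dot(n, b), dot(n, w))   # 0 0 0
```

Every scalar multiple of n passes the same test, which is the 1-dimensional subspace of vectors orthogonal to the plane.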

This sort of relationship also works in general inner product spaces: the vectors orthogonal to all vectors in one subspace always form another subspace.

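Theorem. If W is a subspace of an inner product space V, then the set of all vectors in V that are orthogonal to every vector in W is also a subspace of V.

Proof. This is easy to check: look at the closure rules. The set contains the zero vector, since ⟨0, w⟩ = 0 for every w.

If u and v are orthogonal to all vectors in W, then for every vector w in W,
$$\langle u + v, w \rangle = \langle u, w \rangle + \langle v, w \rangle = 0 + 0 = 0,$$
so u + v is orthogonal to all vectors in W.

If u is a vector orthogonal to all vectors in W, then for every vector w in W and any scalar k,
$$\langle k u, w \rangle = k \langle u, w \rangle = k \cdot 0 = 0,$$
so ku is orthogonal to all vectors in W.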
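The closure argument can be spot-checked numerically. The sketch below assumes V = R^4 with the dot product and a made-up W given by a spanning set; the helper `in_perp` is a hypothetical name. It tests orthogonality only against the spanning vectors, which suffices because the inner product is linear in each argument.

```python
def dot(u, v):
    """Euclidean inner product on R^n."""
    return sum(x * y for x, y in zip(u, v))

def in_perp(x, spanning):
    """True if x is orthogonal to every vector in `spanning`.
    By linearity, this is the same as being orthogonal to the whole span."""
    return all(dot(x, s) == 0 for s in spanning)

# Hypothetical example: W = span{(1, 1, 0, 0), (0, 0, 1, 1)} in R^4.
W = [(1, 1, 0, 0), (0, 0, 1, 1)]
u = (1, -1, 0, 0)
v = (0, 0, 2, -2)
k = 5

u_plus_v = tuple(ui + vi for ui, vi in zip(u, v))
k_u = tuple(k * ui for ui in u)

print(in_perp(u, W), in_perp(v, W))            # True True
print(in_perp(u_plus_v, W), in_perp(k_u, W))   # True True
print(in_perp((1, 0, 0, 0), W))                # False
```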

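This new subspace has a name.

Definition. If W is a subspace of an inner product space V, the set of all vectors in V that are orthogonal to every vector in W is called the orthogonal complement of W, denoted W⊥ (read "W perp").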

The word "complement" comes from the fact that the subspace W⊥ "complements" the subspace W, in the sense that both together give you all of V. More precisely:

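Theorem. If W is a finite-dimensional subspace of an inner product space V, then every vector v in V can be written in exactly one way as
$$v = w + u, \qquad w \in W,\ u \in W^\perp.$$

Proof. (omitted)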
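In R^n with the dot product, the statement that W and W⊥ together give all of V can be made explicit: the W-part of v is its orthogonal projection onto W, and the rest lies in W⊥. The sketch below is one way to compute it, assuming W is given by a linearly independent list of vectors (the function names are hypothetical). It uses Gram–Schmidt, a technique not discussed in this section, to get an orthogonal basis, and exact `Fraction` arithmetic so the final orthogonality check is exact.

```python
from fractions import Fraction

def dot(u, v):
    """Euclidean inner product on R^n."""
    return sum(x * y for x, y in zip(u, v))

def gram_schmidt(basis):
    """Turn a basis of W into an orthogonal basis of W, using exact fractions."""
    ortho = []
    for b in basis:
        v = [Fraction(x) for x in b]
        for q in ortho:
            c = dot(v, q) / dot(q, q)
            v = [vi - c * qi for vi, qi in zip(v, q)]
        ortho.append(v)
    return ortho

def decompose(v, basis):
    """Split v as w + u, with w in W = span(basis) and u orthogonal to W."""
    w = [Fraction(0)] * len(v)
    for q in gram_schmidt(basis):
        c = dot(v, q) / dot(q, q)          # coefficient of the projection onto q
        w = [wi + c * qi for wi, qi in zip(w, q)]
    u = [Fraction(vi) - wi for vi, wi in zip(v, w)]
    return w, u

basis = [(1, 0, 1), (0, 1, 1)]   # hypothetical W: a plane in R^3
w, u = decompose((1, 0, 0), basis)
print(w)   # [Fraction(2, 3), Fraction(-1, 3), Fraction(1, 3)]
print(u)   # [Fraction(1, 3), Fraction(1, 3), Fraction(-1, 3)]
print(all(dot(u, b) == 0 for b in basis))   # True
```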

Some other properties of orthogonal complements:

If W is a subspace of an inner product space V, then

$$W \cap W^\perp = \{0\}.$$

Proof. Any vector v in both W and W⊥ must be orthogonal to itself, that is, ⟨v, v⟩ = 0. By the positivity axiom of the inner product, the only vector orthogonal to itself is the zero vector.

|

|

Proof. (omitted)

|

|

Proof. (omitted)

Though orthogonality is essentially a geometric concept, there's a connection between orthogonal complements and the fundamental subspaces of a matrix.

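Theorem. If A is an m × n matrix, then the null space of A is the orthogonal complement of the row space of A, with respect to the Euclidean inner product (dot product) on $\mathbb{R}^n$.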

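Proof. Denote the rows of A by $r_1, r_2, \ldots, r_m$. Any vector w in the row space of A is a linear combination of $r_1, r_2, \ldots, r_m$:
$$w = c_1 r_1 + c_2 r_2 + \cdots + c_m r_m.$$

A vector x is in the null space of A if and only if it satisfies Ax = 0. Since the i-th entry of Ax is the dot product $r_i \cdot x$, x is in the null space of A if and only if it is orthogonal to all the rows $r_1, r_2, \ldots, r_m$. In that case, for every w in the row space,
$$x \cdot w = c_1 (x \cdot r_1) + c_2 (x \cdot r_2) + \cdots + c_m (x \cdot r_m) = 0.$$
Conversely, if x is orthogonal to every vector in the row space, then in particular it is orthogonal to each row $r_i$, since each row lies in the row space.

Thus x is in the null space of A if and only if it is orthogonal to every vector in the row space of A. This says that the null space of A is the orthogonal complement of the row space of A.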
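The two facts the proof uses, that each entry of Ax is a row of A dotted with x and that orthogonality to the rows carries over to their linear combinations, can be seen on a small example. The matrix `A` and the vector `x` below are made-up values, and `matvec` is a hypothetical helper.

```python
def matvec(A, x):
    """Compute Ax; entry i is the dot product of row i of A with x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def dot(u, v):
    """Euclidean inner product on R^n."""
    return sum(p * q for p, q in zip(u, v))

A = [[1, 2, -1],
     [2, 4, 0]]          # hypothetical 2 x 3 matrix with rows r1, r2
x = [-2, 1, 0]

print(matvec(A, x))      # [0, 0], so x is in the null space of A

# A vector in the row space: w = 3*r1 - 5*r2
w = [3 * p - 5 * q for p, q in zip(A[0], A[1])]
print(w, dot(x, w))      # [-7, -14, -3] 0
```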

This last result can be used to find a basis of the orthogonal complement of a subspace of $\mathbb{R}^n$ if you are given a basis of the subspace itself.

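To find a basis of an orthogonal complement in $\mathbb{R}^n$

Given a basis $\{b_1, b_2, \ldots, b_m\}$ of a subspace W of $\mathbb{R}^n$:

1. Form the m × n matrix A whose rows are $b_1, b_2, \ldots, b_m$.
2. Find a basis of the null space of A, for example by row reducing A and solving Ax = 0.

That basis will be a basis of W⊥.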
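Here is a minimal Python sketch of this recipe, following the idea that W⊥ is the null space of the matrix whose rows are $b_1, \ldots, b_m$. It assumes the input vectors have rational entries and are linearly independent; the names `rref` and `perp_basis` are hypothetical, and exact `Fraction` arithmetic is used instead of floating point so that zero tests during row reduction are reliable.

```python
from fractions import Fraction

def rref(rows):
    """Row reduce a matrix to reduced row echelon form with exact arithmetic.
    Returns the reduced matrix and the list of pivot columns."""
    R = [[Fraction(x) for x in row] for row in rows]
    m, n = len(R), len(R[0])
    pivots = []
    r = 0                                   # index of the next pivot row
    for c in range(n):
        # Look for a nonzero entry in column c at or below row r.
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue                        # no pivot in this column
        R[r], R[p] = R[p], R[r]
        piv = R[r][c]
        R[r] = [x / piv for x in R[r]]      # scale the pivot to 1
        for i in range(m):
            if i != r and R[i][c] != 0:     # clear the rest of column c
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
        if r == m:
            break
    return R, pivots

def perp_basis(basis):
    """Basis of the orthogonal complement of W = span(basis) in R^n.
    The basis vectors are the rows of A; W-perp is the null space of A."""
    R, pivots = rref(basis)
    n = len(basis[0])
    free = [c for c in range(n) if c not in pivots]
    result = []
    for f in free:
        # Set one free variable to 1 and the others to 0, then solve for the pivots.
        x = [Fraction(0)] * n
        x[f] = Fraction(1)
        for i, p in enumerate(pivots):
            x[p] = -R[i][f]
        result.append(x)
    return result

W = [(1, 2, 0, 1), (2, 4, 1, 3)]   # hypothetical basis of a subspace of R^4
perp = perp_basis(W)
for v in perp:
    print([str(t) for t in v])
# ['-2', '1', '0', '0']
# ['-1', '0', '-1', '1']
```

The result has one vector per free variable, so for m independent vectors in $\mathbb{R}^n$ it returns n − m vectors (here 4 − 2 = 2), each orthogonal to every vector in W.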