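This observation makes it easy to calculate the determinant of a diagonal matrix: its only non-zero elementary product is the product of the numbers on its main diagonal. That elementary product takes its column positions in the same order as its row positions, so it needs no swaps and has a positive sign. The determinant of a diagonal matrix is therefore the product of the numbers along its main diagonal:

$$\begin{vmatrix} d_1 & 0 & \cdots & 0 \\ 0 & d_2 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & d_n \end{vmatrix} = d_1 d_2 \cdots d_n.$$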
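To make the claim concrete, here is a minimal sketch that computes a determinant directly as a sum of signed elementary products, counting swaps to get each sign. The function names and the diagonal matrix are hypothetical, chosen only for illustration, and positions are numbered from 0 rather than 1.

```python
from itertools import permutations
from math import prod

def swaps_to_sort(cols):
    """Count the swaps needed to turn the column positions into 0, 1, ..., n-1."""
    cols = list(cols)
    swaps = 0
    for i in range(len(cols)):
        if cols[i] != i:
            j = cols.index(i)  # where the value we need at position i sits now
            cols[i], cols[j] = cols[j], cols[i]
            swaps += 1
    return swaps

def det(matrix):
    """Determinant as the sum of all signed elementary products."""
    n = len(matrix)
    total = 0
    for cols in permutations(range(n)):
        # Row r contributes the entry in column cols[r]: one entry per row and column.
        product = prod(matrix[r][cols[r]] for r in range(n))
        sign = -1 if swaps_to_sort(cols) % 2 else 1  # odd number of swaps -> minus
        total += sign * product
    return total

# Hypothetical diagonal matrix for illustration.
D = [[2, 0, 0],
     [0, -3, 0],
     [0, 0, 5]]

print(det(D))                           # -30
print(prod(D[i][i] for i in range(3)))  # -30
```

Every elementary product except the one down the diagonal picks up a 0, so the sum collapses to that single positive term.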

Recall what we did for the original matrix: for each elementary product, we listed the row and column positions of each entry involved and counted the number of swaps needed to transform the list of column positions into the list of row positions. Here's the example again.

| Entry | 3 | 5 | −4 | 2 |
|---|---|---|---|---|
| Row position | 1 | 2 | 3 | 4 |
| Column position | 3 | 4 | 2 | 1 |
| 1. 3 ↔ 1 produces | 1 | 4 | 2 | 3 |
| 2. 4 ↔ 2 produces | 1 | 2 | 4 | 3 |
| 3. 3 ↔ 4 produces | 1 | 2 | 3 | 4 |
| Total number of swaps: 3 | | | | |
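The swapping procedure in the table can be sketched as a short function. This is a minimal illustration, assuming a greedy rule (fix the first position that is wrong by swapping in the value it needs); `swap_sequence` is a hypothetical name. It reproduces the same three swaps, with the third written as (4, 3) instead of 3 ↔ 4.

```python
def swap_sequence(start, target):
    """Swap values in `start` until it equals `target`.

    Returns the swaps made (as pairs of values) and the final list.
    """
    current = list(start)
    swaps = []
    for i, wanted in enumerate(target):
        if current[i] != wanted:
            j = current.index(wanted)  # position of the value needed at i
            swaps.append((current[i], wanted))
            current[i], current[j] = current[j], current[i]
    return swaps, current

# Column and row positions of the entries 3, 5, -4, 2 from the table.
columns = [3, 4, 2, 1]
rows = [1, 2, 3, 4]

swaps, result = swap_sequence(columns, rows)
print(swaps)       # [(3, 1), (4, 2), (4, 3)]
print(result)      # [1, 2, 3, 4]
print(len(swaps))  # 3, an odd number of swaps
```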

We could just as well have started from the row positions and swapped them to transform the list of row positions into the list of column positions: this uses exactly the same swaps, but in the reverse order.

| Entry | 3 | 5 | −4 | 2 |
|---|---|---|---|---|
| Column position | 3 | 4 | 2 | 1 |
| Row position | 1 | 2 | 3 | 4 |
| 1. 3 ↔ 4 produces | 1 | 2 | 4 | 3 |
| 2. 4 ↔ 2 produces | 1 | 4 | 2 | 3 |
| 3. 3 ↔ 1 produces | 3 | 4 | 2 | 1 |
| Total number of swaps: 3 | | | | |

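The number of swaps is the same, so it doesn't matter whether we start with the row positions or the column positions. This works in general, for any square matrix: if a sequence of swaps turns the list of column positions into the list of row positions, then performing the same swaps in the reverse order turns the row positions back into the column positions, so both directions take the same number of swaps.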
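The reversal argument can be checked mechanically. The sketch below is an illustration under stated assumptions: `swap_sequence`, `apply_swaps`, and `check` are hypothetical helpers, and a swap (a, b) is taken to mean "exchange the positions of the values a and b". It records the swaps that turn column positions into row positions, replays them in reverse starting from the row positions, and confirms this recovers the column positions for every arrangement of 5 positions.

```python
from itertools import permutations

def swap_sequence(start, target):
    """Swap values in `start` until it equals `target`; return the swaps made."""
    current = list(start)
    swaps = []
    for i, wanted in enumerate(target):
        if current[i] != wanted:
            j = current.index(wanted)
            swaps.append((current[i], wanted))
            current[i], current[j] = current[j], current[i]
    return swaps

def apply_swaps(values, swaps):
    """Apply each swap (a, b) by exchanging the positions of values a and b."""
    current = list(values)
    for a, b in swaps:
        i, j = current.index(a), current.index(b)
        current[i], current[j] = current[j], current[i]
    return current

def check(columns):
    """True if the reversed swaps turn the row positions into the column positions."""
    rows = sorted(columns)
    swaps = swap_sequence(columns, rows)       # column positions -> row positions
    back = apply_swaps(rows, reversed(swaps))  # row positions -> column positions
    return back == list(columns)

print(swap_sequence([3, 4, 2, 1], [1, 2, 3, 4]))         # [(3, 1), (4, 2), (4, 3)]
print(check([3, 4, 2, 1]))                                # True
print(all(check(p) for p in permutations(range(1, 6))))  # True
```

Because the reverse direction reuses literally the same swaps, the two counts are always equal.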

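Now consider the transpose. Its elementary products are exactly those of the original matrix, since choosing one entry from each row and each column is unaffected by exchanging the roles of rows and columns. Transposing exchanges the row and column positions of every entry, so finding the sign of an elementary product of the transpose by starting with columns is the same as finding the sign of the corresponding elementary product of the original matrix by starting with rows. Each elementary product therefore has the same sign as before. So the matrix and its transpose have the same signed elementary products, and thus the same determinant. We conclude that the determinant of a matrix equals the determinant of its transpose: for any square matrix $A$, $\det(A^T) = \det(A)$.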
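A quick numerical spot check of $\det(A^T) = \det(A)$ is sketched below. It is not a proof: it assumes a determinant computed from signed elementary products (as in the definition) and tries 100 hypothetical random integer matrices of size 1 to 5. Integer entries keep the arithmetic exact, so the comparison uses `==`.

```python
from itertools import permutations
from math import prod
import random

def sign(cols):
    """+1 for an even number of swaps, -1 for an odd number."""
    cols, swaps = list(cols), 0
    for i in range(len(cols)):
        if cols[i] != i:
            j = cols.index(i)
            cols[i], cols[j] = cols[j], cols[i]
            swaps += 1
    return -1 if swaps % 2 else 1

def det(a):
    """Sum of signed elementary products (one entry from each row and column)."""
    n = len(a)
    return sum(sign(cols) * prod(a[r][cols[r]] for r in range(n))
               for cols in permutations(range(n)))

def transpose(a):
    return [list(col) for col in zip(*a)]

random.seed(0)
for _ in range(100):
    n = random.randint(1, 5)
    a = [[random.randint(-9, 9) for _ in range(n)] for _ in range(n)]
    assert det(transpose(a)) == det(a)
print("det(A^T) == det(A) held for 100 random integer matrices")
```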

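Now consider a determinant in which every entry of one row is a sum:

$$\begin{vmatrix} a+p & b+q & c+r \\ d & e & f \\ g & h & i \end{vmatrix}$$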

When you calculate this determinant, each elementary product contains exactly one entry from the row of sums, and so contains one of the sums as a factor:

$$(a+p)ei + (b+q)fg + (c+r)dh - (c+r)eg - (a+p)fh - (b+q)di.$$

Expand each term, then collect all the terms that came from the first number in one of the original sums, and all the terms that came from the second number:

$$(aei + bfg + cdh - ceg - afh - bdi) + (pei + qfg + rdh - reg - pfh - qdi).$$

The result is two determinants that are identical except for one row: one has the first numbers from the sums in that row, and the other has the second numbers.

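$$\begin{vmatrix} a+p & b+q & c+r \\ d & e & f \\ g & h & i \end{vmatrix} = \begin{vmatrix} a & b & c \\ d & e & f \\ g & h & i \end{vmatrix} + \begin{vmatrix} p & q & r \\ d & e & f \\ g & h & i \end{vmatrix}$$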

This calculation generalizes to determinants of any size, and the same splitting works for a column whose entries are sums.
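The splitting rule can be checked with the six signed elementary products written out explicitly, as in the expansion above. This is a minimal sketch; the numbers standing in for $a, b, c$, $p, q, r$ and the other entries are hypothetical. The column version is checked by transposing, which swaps the row of sums for a column of sums.

```python
def det3(m):
    """3x3 determinant written out as its six signed elementary products."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a*e*i + b*f*g + c*d*h - c*e*g - a*f*h - b*d*i

def transpose(m):
    return [list(col) for col in zip(*m)]

top1 = [1, 2, 3]                 # a, b, c (hypothetical values)
top2 = [4, -1, 0]                # p, q, r (hypothetical values)
rest = [[2, 5, 7], [0, 3, -2]]   # rows d e f and g h i

summed = [x + y for x, y in zip(top1, top2)]  # a+p, b+q, c+r

# Row of sums: one determinant splits into two.
lhs = det3([summed] + rest)
rhs = det3([top1] + rest) + det3([top2] + rest)
print(lhs == rhs)  # True

# Column of sums: transpose so the sums form the first column instead.
col_lhs = det3(transpose([summed] + rest))
col_rhs = det3(transpose([top1] + rest)) + det3(transpose([top2] + rest))
print(col_lhs == col_rhs)  # True
```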

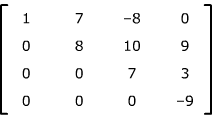

Proof: Suppose first that the matrix is upper triangular. Most of its elementary products contain at least one zero and so are 0, so look for the ways you can get a non-zero elementary product.

You must take a number from each column.

For column 1, the only possibility is the number in row 1.

For column 2, you can't choose the number in row 1, since you've already used a number from row 1. Every number below row 2 in that column is 0, so you must choose the number in row 2.

And so on: the only way you can possibly get a non-zero elementary product is to take the product of the numbers down the main diagonal. (And if any of those is 0, you can't get any non-zero elementary products.) The sign of this elementary product is +, since its column positions are already in the same order as its row positions and no swaps are needed, so the determinant is the product of the numbers down its main diagonal.

For a lower triangular matrix, the same basic idea works; just look at which rows you can choose your numbers from.
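The proof's key step, that the main diagonal is the only possible non-zero elementary product, can be observed by brute force. This sketch assumes a hypothetical upper triangular matrix `U` and uses its transpose as a lower triangular example; positions are numbered from 0, so the main diagonal shows up as the column choice `(0, 1, 2, 3)`.

```python
from itertools import permutations
from math import prod

def nonzero_elementary_products(a):
    """Column choices (one per row) whose elementary product is non-zero."""
    n = len(a)
    return [cols for cols in permutations(range(n))
            if prod(a[r][cols[r]] for r in range(n)) != 0]

# Hypothetical upper triangular matrix; its transpose is lower triangular.
U = [[2, 7, -1, 4],
     [0, 3, 5, 8],
     [0, 0, -4, 6],
     [0, 0, 0, 1]]
lower = [list(row) for row in zip(*U)]

for m in (U, lower):
    # Only the main diagonal survives; it needs no swaps, so its sign is +.
    print(nonzero_elementary_products(m))   # [(0, 1, 2, 3)]
    print(prod(m[i][i] for i in range(4)))  # -24
```

Even though every entry above the diagonal of `U` is non-zero, each other column choice must reach below the diagonal somewhere and picks up a 0.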
